Using Jewelry to Communicate

The face already plays an important role in communication, but a group of UC Davis computer scientists led by Ph.D. student Shuyi Sun is taking this to the next level. The team is designing facial jewelry that can use signals from a person’s facial muscles to send wireless commands to at-home devices like Alexa and Google Home. By reading a user’s conscious and unconscious gestures, the technology could help people silently operate lights and other devices, or discreetly send messages to get out of potentially dangerous situations.

The device consists of two on-skin sensors covered by jewels and connected to a circuit board through a wire. When a user frowns or makes another gesture, the circuit closes and sends a Wi-Fi signal to an external device to trigger a routine.

“We want to make an interface that is on-skin, seamless, beautiful and non-gender-specific that fits on the face and [enables] a different type of interaction than what is typically used in wearable devices,” Sun said. “We also want to integrate a bunch of different systems together and make it something that recognizes your unconscious gestures and [enhances] them in some way by technology.”

The face is sensitive, so the device needs to be softer and more malleable than other types of wearable technology. It also needs to be small enough to sit on the head, and the wires and circuit boards can’t be conductive if they’re touching hair. Balancing these needs while making the device more functional is the next step toward making the technology a reality.

From Class to Conference

The project started in CS associate professor Hao-Chuan Wang’s human-computer interaction course (ECS 289) in fall 2020. Wang suggested that the students combine beauty technology and at-home devices, which got Sun thinking about wearable technology. At first, she and her group considered a hair extension device that could send a text or record a conversation when touched, but they settled on facial jewelry because they wanted something that could read unconscious signals.

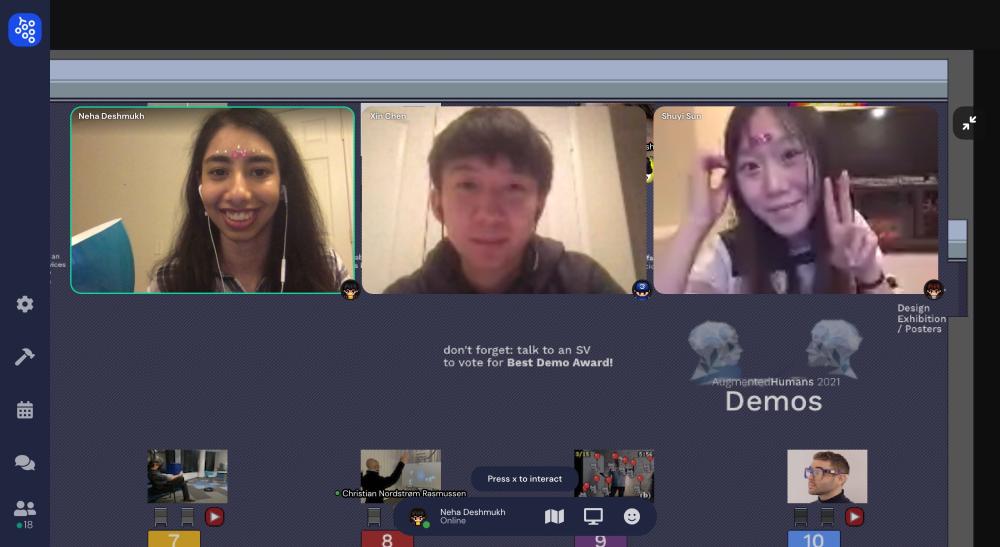

Sun continued the project with her advisor, Design professor Katia Vega. Sun and undergraduate students Neha Deshmukh and Xie Chen then presented the device at the 2021 Augmented Humans Conference, held in cooperation with the Association for Computing Machinery, where it won the Best Demo Award. The team set their demo apart by coupling a live demonstration with a previously recorded video.

“I was able to turn on my co-author’s light in her room with my device, and she could send me a message with her device,” she said. “That worked to our advantage because we were on camera, and people could tell that we were not in the same space while watching it happen right in front of them.”

The team has continued working on the design and began user studies this fall. They’re working to improve functionality in two ways: by expanding the number of signals users can send, so that they can trigger multiple routines, and by incorporating electromyography (EMG) sensing, which registers the electrical signals produced by muscles. They’re also making their own silicone- and resin-based gems that are customizable and more comfortable to wear. Sun is excited to see the idea continue to develop, and its success has encouraged her to trust and build on the team’s ideas.

“It’s really motivating to be recognized by other people because it tells me that there is something to work on here and that others are interested in this topic,” she said.